Machine learning: Definition and Types

In the rapidly evolving field of technology today, “machine learning” is becoming a very popular term. It’s changing how we live and work, from recommendation engines to self-driving automobiles. However, what is machine learning really, and how does it operate? We shall examine the many aspects of machine learning in this article and provide insight into its uses, algorithms, and other aspects.

Definition of Machine learning

Within the subject of artificial intelligence (AI), machine learning employs algorithms that have been trained on data sets to generate self-learning models that can classify information and predict events without the need for human interaction. These days, machine learning is applied to a broad range of commercial applications, such as translating text between languages, predicting stock market swings, and making product recommendations to customers based on their previous purchases.

Because machine learning is so widely used for AI purposes today, the phrases “machine learning” and “artificial intelligence” are frequently used interchangeably. The two terms are, nevertheless, fundamentally different. Artificial intelligence (AI) refers to the general goal of creating computers with cognitive capacities similar to those of humans, whereas machine learning refers specifically to the use of algorithms and data sets to pursue that goal.

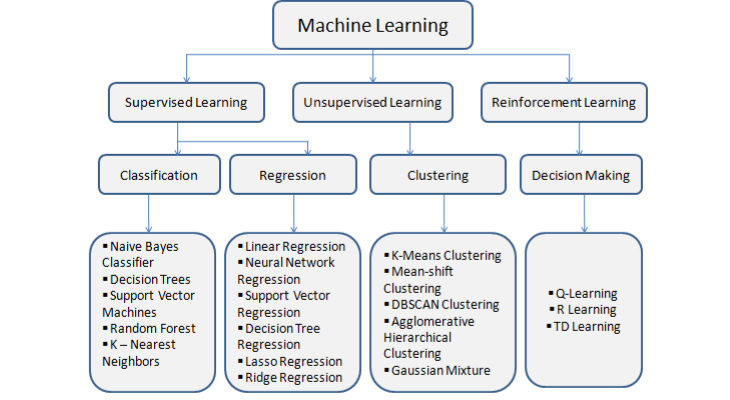

Types of machine learning

The many diverse digital products and services we use on a daily basis are powered by several forms of machine learning. The specific techniques used by each of these categories vary slightly, but they all aim to achieve the same objective: building devices and applications that can function without human supervision.

Here’s an overview of the four main forms of machine learning that are now in use to help you understand how they differ from one another.

1. Supervised machine learning

In supervised machine learning, algorithms are trained on labeled data sets whose tags describe each item of data. Stated differently, the algorithms receive data accompanied by an “answer key” that provides guidance on how to interpret it. For example, an algorithm might be fed images of flowers tagged with each flower’s type so that it can better recognize flowers in new photographs.

Supervised machine learning is frequently used to develop models for classification and prediction.
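As a minimal sketch of learning from an “answer key”, the example below classifies a flower by copying the label of the most similar labeled example (a 1-nearest-neighbor rule). The measurements, flower names, and the `predict` function are illustrative assumptions, not data from this article.

```python
import math

# Hypothetical labeled training set: (petal length cm, petal width cm) -> flower name.
# The values are illustrative only.
TRAINING_SET = [
    ((1.4, 0.2), "setosa"),
    ((1.5, 0.3), "setosa"),
    ((4.5, 1.5), "versicolor"),
    ((4.7, 1.4), "versicolor"),
    ((6.0, 2.5), "virginica"),
    ((5.8, 2.2), "virginica"),
]


def predict(features):
    """Return the label of the closest training example (1-nearest neighbor)."""
    _, nearest_label = min(
        TRAINING_SET, key=lambda example: math.dist(example[0], features)
    )
    return nearest_label


print(predict((1.3, 0.25)))
print(predict((5.9, 2.3)))
```

The labels do all the guiding here: without them the function would have nothing to return for a new flower.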

2. Unsupervised machine learning

Algorithms are trained using unlabeled data sets in unsupervised machine learning. The program must find patterns on its own without external assistance because it is fed data without tags during this procedure. For example, to find trends in user behavior on a social media platform, an algorithm may be fed a lot of unlabeled user data that was taken from the site.

To swiftly and effectively find patterns in massive, unlabeled data sets, researchers and data scientists frequently employ unsupervised machine learning.
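To make pattern finding without labels concrete, here is a small sketch of k-means clustering with two groups on a single feature. The feature (daily minutes spent on a platform), the values, and the function name `k_means_1d` are assumptions chosen for illustration.

```python
def k_means_1d(values, iterations=10):
    """Cluster numbers into two groups with a basic k-means loop (k = 2)."""
    centroids = [min(values), max(values)]  # simple deterministic starting points
    clusters = [[], []]
    for _ in range(iterations):
        clusters = [[], []]
        for value in values:
            # Assign each value to the nearest centroid.
            near_first = abs(value - centroids[0]) <= abs(value - centroids[1])
            clusters[0 if near_first else 1].append(value)
        # Move each centroid to the mean of the values assigned to it.
        centroids = [
            sum(cluster) / len(cluster) if cluster else centroid
            for cluster, centroid in zip(clusters, centroids)
        ]
    return centroids, clusters


# Hypothetical unlabeled data: minutes per day spent on a platform.
daily_minutes = [5, 7, 6, 8, 90, 110, 95, 100]
centroids, clusters = k_means_1d(daily_minutes)
print(centroids)
print(clusters)
```

No tag ever tells the algorithm which users are “light” or “heavy”; the two groups emerge from the distances alone.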

3. Semi-supervised machine learning

Algorithms are trained using both labeled and unlabeled data sets in semi-supervised machine learning. In semi-supervised machine learning, algorithms are typically fed significantly greater amounts of unlabeled data to finish the model after first receiving a small quantity of labeled data to guide their development. To build a machine learning model that can recognize voice, for instance, an algorithm might first be fed a smaller amount of labeled speech data and then trained on a much larger set of unlabeled speech data.

When a significant amount of labeled data is unavailable, semi-supervised machine learning is frequently used to train algorithms for classification and prediction applications.
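One simple way to combine the two kinds of data is pseudo-labeling: fit on the small labeled set, label the unlabeled points with that first model, then refit on everything. The sketch below does a single round of this with a nearest-centroid rule; the one-dimensional feature, the labels, and the function names are hypothetical.

```python
def mean(values):
    return sum(values) / len(values)


def self_train(labeled, unlabeled):
    """Pseudo-label unlabeled points with a nearest-centroid rule, then refit."""
    # Step 1: fit one centroid per class on the small labeled set.
    groups = {}
    for value, label in labeled:
        groups.setdefault(label, []).append(value)
    centroids = {label: mean(values) for label, values in groups.items()}

    # Step 2: give each unlabeled point the label of its nearest centroid.
    for value in unlabeled:
        label = min(centroids, key=lambda name: abs(value - centroids[name]))
        groups[label].append(value)

    # Step 3: refit the centroids on labeled plus pseudo-labeled data.
    return {label: mean(values) for label, values in groups.items()}


labeled = [(1.0, "short"), (9.0, "long")]   # small labeled set
unlabeled = [2.0, 3.0, 8.0, 10.0, 7.0]      # larger unlabeled set
print(self_train(labeled, unlabeled))
```

Practical systems usually repeat these rounds and keep only confident pseudo-labels; this sketch omits both refinements.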

4. Reinforcement learning

Reinforcement learning trains algorithms and builds models through trial and error. Algorithms work in particular surroundings during the training phase, and after each result, they receive feedback. The algorithm gradually gains awareness of its surroundings and starts to optimize behaviors to accomplish certain goals, much like a toddler learning. For example, an algorithm can be made more efficient by playing chess games one after the other. This way, the algorithm can learn from its previous mistakes and victories.

Reinforcement learning is frequently used to develop algorithms that, in order to accomplish tasks like playing a game or summarizing a text, must efficiently make a series of decisions or actions.
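The feedback loop can be sketched with tabular Q-learning on a toy problem. The example assumes a hypothetical five-cell corridor in which the agent earns a reward of 1 for reaching the last cell; the constants and function names are illustrative. To keep the run deterministic, it tries every action in every state on each sweep, whereas practical agents usually explore at random.

```python
N_STATES = 5          # positions 0..4; position 4 is the goal
ACTIONS = (-1, +1)    # step left or step right
ALPHA, GAMMA = 0.5, 0.9  # learning rate and discount factor


def step(state, action):
    """Environment: move, stay inside the corridor, reward 1.0 on reaching the goal."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if next_state == N_STATES - 1 else 0.0
    return next_state, reward


def train(sweeps=200):
    q = {(s, a): 0.0 for s in range(N_STATES) for a in ACTIONS}
    for _ in range(sweeps):
        # Try every action in every non-goal state and learn from the feedback.
        for state in range(N_STATES - 1):
            for action in ACTIONS:
                next_state, reward = step(state, action)
                if next_state == N_STATES - 1:
                    best_next = 0.0  # the goal is terminal
                else:
                    best_next = max(q[(next_state, a)] for a in ACTIONS)
                target = reward + GAMMA * best_next
                q[(state, action)] += ALPHA * (target - q[(state, action)])
    return q


q_table = train()
# Greedy policy: the highest-valued action in each non-goal state.
policy = [max(ACTIONS, key=lambda a: q_table[(s, a)]) for s in range(N_STATES - 1)]
print(policy)
```

After training, the greedy policy steps right in every cell, because those actions accumulated the highest expected reward.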

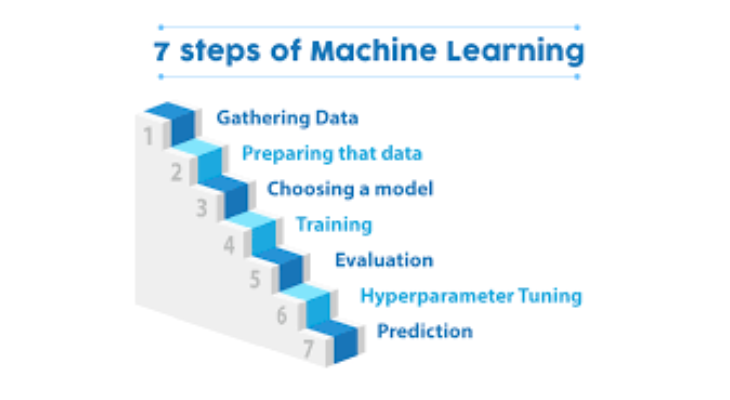

There Are Seven Machine Learning Steps

- Gathering Data

- Preparing that data

- Choosing a model

- Training

- Evaluation

- Hyperparameter Tuning

- Prediction
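As a rough illustration, the seven steps can be walked through on a toy problem. The sketch below assumes a hypothetical data set of drinks described only by alcohol percentage and uses a small k-nearest-neighbors rule; the data, the function names, and the candidate values of k are all illustrative assumptions.

```python
# 1. Gather data: hypothetical (alcohol %, label) pairs; None marks a missing reading.
raw = [(4.5, "beer"), (5.0, "beer"), (None, "beer"), (12.5, "wine"),
       (13.0, "wine"), (5.5, "beer"), (11.5, "wine"), (4.8, "beer"),
       (12.0, "wine"), (6.0, "beer"), (14.0, "wine"), (5.2, "beer")]

# 2. Prepare the data: drop incomplete rows, then split off a validation set.
clean = [row for row in raw if row[0] is not None]
train_set, validation_set = clean[:8], clean[8:]


# 3. Choose a model: k-nearest neighbors on a single feature.
def knn_predict(rows, x, k):
    nearest = sorted(rows, key=lambda row: abs(row[0] - x))[:k]
    labels = [label for _, label in nearest]
    return max(set(labels), key=labels.count)  # majority vote


# 4. Training: k-NN only stores the training rows, so there is nothing to fit.

# 5. Evaluation: fraction of rows predicted correctly.
def accuracy(rows, k):
    hits = sum(knn_predict(train_set, x, k) == label for x, label in rows)
    return hits / len(rows)


# 6. Hyperparameter tuning: keep the k with the best validation accuracy.
best_k = max((1, 3, 5), key=lambda k: accuracy(validation_set, k))

# 7. Prediction for a new, unseen drink.
print(best_k, knn_predict(train_set, 12.8, best_k))
```

Most models have a real fitting stage at step 4; k-NN was chosen here only because it keeps the sketch short.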

Prerequisites include a programming language (Python is preferred) and the necessary analytical and quantitative skills. Before tackling machine learning problems, you should review the following five mathematical topics:

- Linear algebra for data processing: scalars, vectors, matrices, and tensors

- Mathematical analysis: derivatives and gradients

- Probability theory and statistics for machine learning

- Multivariable calculus

- Algorithms and complex optimization

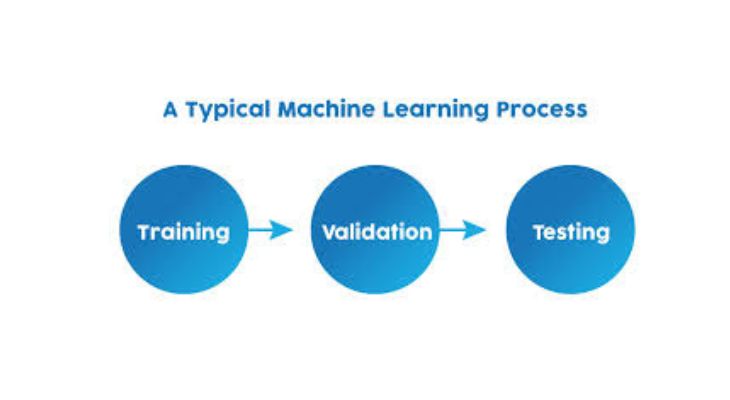

How does Machine Learning work?

A machine learning system has three main components: a model, its parameters, and a learner.

- A model is a system that generates predictions.

- The variables that the model takes into account in order to generate predictions are called parameters.

- In order to match the predictions with the actual outcomes, the learner modifies the model’s parameters.
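The three components can be sketched in a few lines. In this example the parameters are taken to be the adjustable numbers `w` and `b` inside the model, which is one common reading of the term, and the learner is plain gradient descent on the mean squared error. The data, learning rate, and function names are assumptions made for illustration.

```python
def model(x, w, b):
    """Model: turns an input into a prediction using the parameters w and b."""
    return w * x + b


def learner(data, w=0.0, b=0.0, learning_rate=0.05, epochs=2000):
    """Learner: adjusts w and b to shrink the gap between predictions and outcomes."""
    n = len(data)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b.
        grad_w = sum(2 * (model(x, w, b) - y) * x for x, y in data) / n
        grad_b = sum(2 * (model(x, w, b) - y) for x, y in data) / n
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


# Hypothetical observations generated from y = 2x + 1.
observations = [(0, 1), (1, 3), (2, 5), (3, 7)]
w, b = learner(observations)
print(round(w, 3), round(b, 3))
```

The learner never sees the rule y = 2x + 1; it recovers values close to it purely by reducing the prediction error.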

To further understand how machine learning functions, consider a beer and wine example. The task requires a machine learning model to identify whether a beverage is wine or beer. The drink’s color and its alcohol percentage are the chosen criteria. The process involves the following steps:

1. Learning from the training set

In order to do this, a sample data set of multiple beverages with defined colors and alcohol percentages is taken. The description of each classification—wine and beer, for example—must now be defined in terms of the parameter values for each kind. The description can be used by the model to determine whether a new beverage is a wine or beer.

For the parameters “color” and “alcohol percentages,” you can use the characters “x” and “y,” respectively, to represent the values. In the training data, each drink’s parameters are defined by (x,y). A training set is a collection of data like this one. When these data are graphed, a hypothesis that best fits the intended outcomes can be shown as a line, a rectangle, or a polynomial.
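A training set of (x, y) points and a straight-line hypothesis might look like the sketch below. It describes each class by the average of its training points and assigns a new drink to the closer average, which yields a linear boundary between the two classes. The color score (0 for pale, 1 for dark red), the numbers, and the function names are assumptions, not values from this article.

```python
import math

# Hypothetical training set: (x, y) = (color score, alcohol %) plus a label.
TRAINING_SET = [
    ((0.20, 4.5), "beer"), ((0.30, 5.0), "beer"),
    ((0.25, 5.5), "beer"), ((0.35, 6.0), "beer"),
    ((0.80, 12.0), "wine"), ((0.90, 13.0), "wine"),
    ((0.85, 12.5), "wine"), ((0.75, 13.5), "wine"),
]


def fit_centroids(training_set):
    """Describe each class by the mean (x, y) of its training examples."""
    points_by_label = {}
    for point, label in training_set:
        points_by_label.setdefault(label, []).append(point)
    return {
        label: tuple(sum(axis) / len(points) for axis in zip(*points))
        for label, points in points_by_label.items()
    }


def classify(point, centroids):
    """Pick the class whose centroid is closest to the point."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


centroids = fit_centroids(TRAINING_SET)
print(classify((0.3, 5.2), centroids))
print(classify((0.8, 12.8), centroids))
```

Because alcohol percentage spans a much wider numeric range than the color score, it dominates the distance here; rescaling the features would balance their influence.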

2. Measure error

After the model has been trained on a predetermined training set, it must be examined for inconsistencies and mistakes. We achieve this goal using a new collection of data. The outcome of this test would be one of these four:

- True positive: the condition is present and the model predicts it.

- True negative: the condition is absent and the model does not predict it.

- False positive: the model predicts a condition that is not present.

- False negative: the condition is present but the model does not predict it.

The model’s total error is the sum of the false positives (FP) and the false negatives (FN).
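Counting the four outcomes is straightforward once predictions are paired with the true labels. The sketch below treats “wine” as the positive condition, which is an arbitrary choice; the label lists and the function name are made up for illustration.

```python
def confusion_counts(actual, predicted, positive="wine"):
    """Tally true/false positives and negatives for one positive class."""
    counts = {"TP": 0, "TN": 0, "FP": 0, "FN": 0}
    for truth, guess in zip(actual, predicted):
        if guess == positive:
            counts["TP" if truth == positive else "FP"] += 1
        else:
            counts["FN" if truth == positive else "TN"] += 1
    return counts


# Hypothetical test results.
actual = ["wine", "wine", "beer", "beer", "wine", "beer"]
predicted = ["wine", "beer", "beer", "wine", "wine", "beer"]

counts = confusion_counts(actual, predicted)
total_error = counts["FP"] + counts["FN"]  # misclassified drinks
print(counts, total_error)
```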

3. Manage Noise

For simplicity’s sake, we have considered only two parameters, color and alcohol percentage, in this machine learning problem. In practice, however, solving a machine learning problem requires taking into account hundreds of parameters and a large collection of training data.

A hypothesis developed from such data will contain many more errors because of noise. Noise, meaning unwanted anomalies, masks the underlying relationship in the data set and impairs learning. There are several causes of this noise, including:

- Large training data sets

- Errors in the input data

- Data labeling mistakes

- Unobservable characteristics that could affect classification but are not taken into account in the training set because of insufficient data

To keep the hypothesis as basic as feasible, you can allow a certain amount of noise-induced training error.

4. Testing and Generalization

An algorithm or hypothesis may perform well when applied to a training set of data, but it may not perform well when applied to another set of data. Determining whether the algorithm is appropriate for fresh data is therefore crucial. This can be evaluated by testing it with a fresh batch of data. The ability of the model to forecast results for a fresh batch of data is sometimes referred to as generalization.

When a hypothesis is too simple, it cannot capture the underlying relationship and shows substantial error on both the training set and new data. We refer to this as underfitting. Conversely, a hypothesis that is too intricate can fit the training set very closely, noise included, and yet fail to generalize to new data. This is overfitting. In either scenario, the outcome feeds back into the model to further train it.
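Comparing error on the training set with error on fresh data makes the difference visible. The sketch below pits three hand-written hypotheses against each other on hypothetical data in which one training label is deliberately wrong; the numbers, the 9.0 cut-off, and the function names are assumptions for illustration.

```python
# Hypothetical (alcohol %, label) data; the 5.5 "wine" row is deliberate label noise.
train_set = [(4.5, "beer"), (5.0, "beer"), (5.5, "wine"), (6.0, "beer"),
             (12.0, "wine"), (12.5, "wine"), (13.0, "wine")]
test_set = [(4.8, "beer"), (5.4, "beer"), (5.6, "beer"),
            (12.2, "wine"), (13.5, "wine")]


def always_beer(x):
    """Too simple (underfits): ignores the input entirely."""
    return "beer"


def threshold_rule(x):
    """Simple cut-off that tolerates the noisy training point."""
    return "wine" if x > 9.0 else "beer"


def nearest_neighbor(x):
    """Too flexible (overfits): copies the closest training label, noise included."""
    return min(train_set, key=lambda row: abs(row[0] - x))[1]


def error_rate(predict, rows):
    return sum(predict(x) != label for x, label in rows) / len(rows)


for predict in (always_beer, threshold_rule, nearest_neighbor):
    print(predict.__name__,
          round(error_rate(predict, train_set), 3),
          round(error_rate(predict, test_set), 3))
```

The nearest-neighbor rule makes no mistakes on the training set but errs on the fresh data around the noisy point, while the threshold rule accepts one training error and generalizes better.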

Which Language is Best for Machine Learning?

Python is widely regarded as the leading programming language for machine learning applications because of the advantages described below. Other programming languages that could be used include Scala, R, Julia, C#, C++, and JavaScript.

Python is well-known for being easier to read and having less complexity than other programming languages. Complex ideas like calculus and linear algebra are used in machine learning applications, and their implementation requires a lot of time and work. Python eases this load by enabling the ML engineer to quickly validate an idea through implementation. For an introduction to the language, you might look at the Python Tutorial. The pre-built libraries in Python are an additional advantage. As listed below, there are various packages for various kinds of applications:

- NumPy, OpenCV, and scikit-image for working with images

- NLTK, together with NumPy and scikit-learn, for working with text

- Librosa for audio applications

- Matplotlib, Seaborn, and scikit-learn for data representation

- TensorFlow and PyTorch for deep learning applications

- SciPy for scientific computing

- Django for integrating web applications

- pandas for high-level data structures and analysis

Conclusion

We have walked through the complex realm of machine learning in this tutorial, covering everything from basic ideas to algorithms, data preprocessing, and ethical issues. Understanding and using machine learning’s potential is essential to staying relevant in the current digital era as technology develops. You will therefore be well on your way to becoming an expert in the field of machine learning if you continue to explore, learn, and innovate.

FAQs

What is reinforcement learning?

Reinforcement learning is a machine learning paradigm in which an agent learns to make sequential decisions by interacting with its environment, with the goal of maximizing a reward signal. It is frequently used in robotics and gaming.

Which machine learning algorithms are currently in use?

A variety of machine learning methods are available, such as k-nearest neighbors, decision trees, neural networks, and support vector machines. Each has its own strengths and works best with particular kinds of problems.

What is data preprocessing in machine learning?

Preprocessing the data is essential for accurate modeling. Data cleaning, feature engineering, handling missing values, scaling, and dividing data into training and testing groups are some of the tasks involved.
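A few of these tasks can be sketched in plain Python. The example fills a missing value with the mean, rescales the feature to the range 0 to 1, and holds out part of the data for testing. The feature values and function names are hypothetical, and libraries such as pandas and scikit-learn provide more complete versions of each step.

```python
def fill_missing(values):
    """Replace None with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]


def min_max_scale(values):
    """Rescale values linearly into [0, 1]; assumes they are not all equal."""
    low, high = min(values), max(values)
    return [(v - low) / (high - low) for v in values]


def train_test_split(rows, test_fraction=0.25):
    """Hold out the last part of the rows for testing (shuffle first in practice)."""
    cut = int(len(rows) * (1 - test_fraction))
    return rows[:cut], rows[cut:]


alcohol = [4.0, None, 6.0, 14.0]   # hypothetical raw feature with a gap
filled = fill_missing(alcohol)      # the gap becomes the mean of 4, 6 and 14
scaled = min_max_scale(filled)
train_rows, test_rows = train_test_split(scaled)
print(filled, scaled, train_rows, test_rows)
```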

Which machine learning evaluation measures are frequently used?

Accuracy, precision, recall, F1 score, and the receiver operating characteristic (ROC) curve are examples of common evaluation metrics. These measurements help evaluate the effectiveness of classification models.
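Four of these metrics follow directly from the counts of true and false positives and negatives. The sketch below uses their standard definitions; the counts passed in are invented for illustration, and the function assumes at least one positive prediction and one positive example so that no division by zero occurs.

```python
def classification_metrics(tp, tn, fp, fn):
    """Compute common metrics from confusion-matrix counts."""
    accuracy = (tp + tn) / (tp + tn + fp + fn)   # share of all predictions that are right
    precision = tp / (tp + fp)                   # share of predicted positives that are right
    recall = tp / (tp + fn)                      # share of actual positives that are found
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean of the two
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


# Hypothetical counts from a test run.
metrics = classification_metrics(tp=6, tn=10, fp=2, fn=2)
print(metrics)
```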

What function do libraries and frameworks for machine learning serve?

Machine learning libraries and frameworks, including PyTorch, TensorFlow, and scikit-learn, offer pre-built tools and functions to make machine learning easier for developers and data scientists to use.

What ethical issues surround machine learning?

Algorithmic fairness, privacy concerns, and the effects of automation and artificial intelligence on employment are some of the ethical challenges surrounding machine learning. Addressing bias and discrimination in models is a major ethical concern.
